With every version of iOS, Apple adds new accessibility features and improves existing ones, and iOS 14 is no exception. The Magnifier tool, designed for people with low vision, has been redesigned in iOS 14.

We previously published a complete article on the Magnifier in iOS 13, which you can read to learn in depth how to use its tools and filters.

Updated interface

The app's interface has been updated so that its controls are more visible and it is clearer what each one does. You can adjust the brightness and contrast, or apply a color filter to the magnified image to make it easier to see.

All controls can be rearranged to suit individual needs. The filter options can also be customized, so you can pick the one that gives you the best results and activate it with a single tap.

In dimly lit environments, the flashlight can be turned on with a single tap, and the magnification level can be easily adjusted with a dial. Many of these functions were already available in previous versions, as described in the article linked at the beginning, but there are some new features worth reviewing.

Multiple shots

With the new multi-shot option, Magnifier users can capture several images in a row, for example different parts of a sheet of paper, and then review them all at once. Previously, photos had to be taken one at a time, and each new photo replaced the previous one.

To activate it, tap the icon with two overlapping squares in the lower-right corner.

Add Magnifier to the Home Screen

Regular Magnifier users can now add the app icon to the Home Screen (previously it could be added to Control Center).

To do this, open the App Library, search for Magnifier, and add it to the Home Screen.

Magnifier can also be opened by triple-clicking the iPhone's side button.

For Magnifier to appear in the App Library, it must be turned on: go to Settings > Accessibility > Magnifier and tap the switch to turn it on.

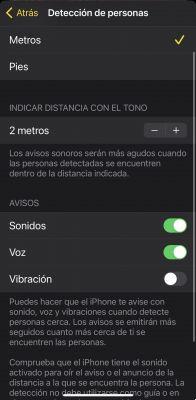

People detection

The People Detection option uses augmented reality and machine learning to detect nearby people and measure how far away they are.

Its purpose is to help blind and low-vision users move around their surroundings more safely.

It relies on the iPhone 12 Pro's LiDAR scanner (so it is only available on iPhone models that have this sensor) to help users understand their surroundings: for example, how many people are in the checkout line, how far it is to the edge of the subway platform, or which seat is free at a table. It is especially useful in this age of social distancing, since it can tell you when someone is within 2 meters of you (or whatever distance you have configured in its settings).